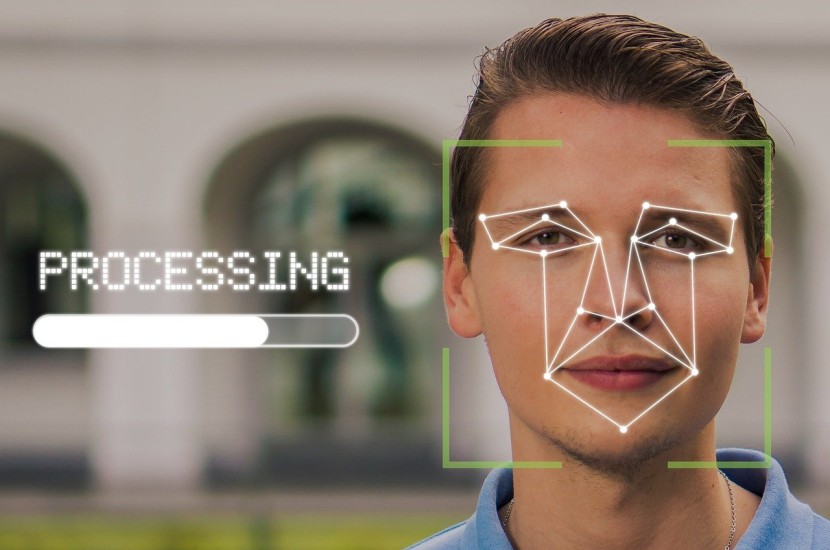

One of the hottest and most interesting technologies to leap from the pages of comics into our day-to-day reality is facial recognition. It is also one of the most controversial technologies we have dealt with in some time, and one of the most misunderstood among the non-tech crowd - in other words, normal people.

Right now, there is a groundswell of anti-facial recognition activism. President Biden is being urged to ban facial recognition technology to protect online privacy. Some of the opposition exists because people tend to fear what they don't understand. But that is not all that is driving the anti-facial recognition movement.

Though it is perfectly legal to photograph people in public, many have a strong aversion to having their picture taken without consent. They fear a loss of agency and autonomy. There is also a civil rights angle to be considered. Facial recognition has not always been applied in an even-handed way. Are there legitimate concerns? Or is it much ado about nothing? Let's explore.

What If You Change Your Face?

Most people have no idea how facial recognition software does the actual recognizing. One positive use of facial recognition could be allowing keyless entry into an office building. However, what happens if you have a procedure done on your face that changes the shape and geometry of your eyes and nose? You might find yourself locked out of your office building, or your smartphone.

If the images on file show you with misaligned teeth, what happens when you go for the best invisible braces money can buy and subtly alter your facial geometry? In theory, it shouldn't matter because good software is looking at numerous points of data and not just the geometry of your eyes, nose, and mouth.

That said, not all facial recognition tech is created equal. If the software used in your small town is not up to the same standards as that used by the FBI, you might find yourself a suspect for a crime you didn't commit. It doesn't mean you would be convicted or, if convicted, that the conviction would stand. But it could mean a bad week and some unscheduled time off work - oh, and legal fees. All of that headache could potentially happen for no better reason than you decided to get a brow lift.

What If the Software Is Racist or Sexist?

First, software cannot be racist or sexist. But it can make more facial recognition errors for women and people with darker skin. The real question is how the software came to be this way. The answer might reveal some hidden and systemic racism and sexism. The algorithm can only be as good as the training data it is given. Since software coding is predominantly a white male's game, the available training data is predominantly white and male. The software simply doesn't have as much experience with any other demographic.

Efforts are underway to correct this problem, but for now facial recognition is going to favor white males and disadvantage women and people of color. They will be the victims of false recognition far more often. In its current form, people rightfully fear that facial recognition will further diminish civil rights and promote injustice against minorities.

Will the U.S. Become a Surveillance State?

In some ways, this is the least interesting concern because the U.S. is already a surveillance state. We have surveillance cameras everywhere. The police already have access to surveillance footage of high-profile locations. There are cameras on traffic lights. There are cameras in grocery stores. And software already tracks our every movement on the internet.

Facial recognition has had many fits and starts. But none of that matters. It is already here, and more is on the way. We are already having to deal with bad software, racial discrimination, and privacy invasions. This train will not be stopped. The best we can hope for is that big tech works out the bugs so that it is applied as judiciously and fairly as possible.

© 2026 HNGN, All rights reserved. Do not reproduce without permission.